This CSS art is made by a tricky croping CSS effect. The tricky part is to make a leaf. But the hardest part is to calculate the correct math arguments. This CSS art is scalable. You may change the width and height to have different size of flow:

Taichi Logo in CSS

A Taichi logo made with pure CSS, codepen link:

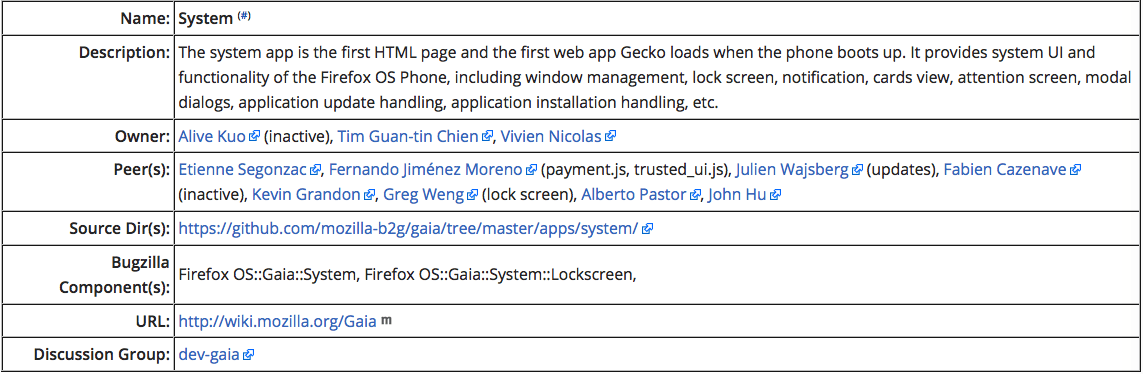

System App Peer

John Hu had became a peer of system app. I am responsible for TV system app since I had worked on the first version of TV gaia. It’s my honor to be part of system app.

How to disable shortcuts at android without rooted?

It’s so nice to see android has keyboard shortcuts feature with keyboard attached. But it may be a little annoying that we want to use those keys to do what we want. John Hu had created another open source project called shun-feng-er which is a tool for eye impaired person. During this project, we need to override the behavior of those shortcuts.

As per discussions and here, it is not possible to disable it or overide it at app level. The solution I found is to use Xposed Framework to inject code at WindowManagerPolicy. This needs a rooted device. But it doesn’t make sense to ask an user with hardware keyboard to have a rooted device.

After some investigations, I found that there is a keyboard layout config called “apple wireless keyboard”. It had change the meta key to home key. The most magic part is all shortcuts related to meta key are disabled, like META + C, META + TAB, etc That’s amazing. So, I try to find how it does.

The main part is to remap the key, it only remaps META key to HOME key. This is its configuration:

# remap meta key

map key 125 F12

map key 126 F12

# remap alt key

map key 56 F11

map key 100 F11

We need to change the keyboard layout once connected. It can be made at Settings app -> Language and input -> Physical keyboard -> (tap it and choose new mapping config)

Please find the whole patch at https://github.com/john-hu/shun-feng-er/commit/a2baf65e0407f294534560278b72474cb9f9dc13.

Different Indentation on Different Languages in Sublime Text

While writing programs in pyopencl, we should face the indentation of python and CL. According to the coding convention, python uses 4 spaces as the indentation and C uses 2 spaces as the indentation. So, we may want to configure our IDE to have different indentation on different programming languages.

As a user of Sublime Text, I found that the Syntax Specific is the correct place to configure it. If we want to configure C to use 2 spaces as its default indentation, we can do the followings:

- Open/create a C file

- Open Syntax Specific at Preferences -> Settings-More -> Syntax Specific

- use the following content as the opened file which should be C++.sublime-settings:

{

"tab_size": 2,

"translate_tabs_to_spaces": true

}

It’s so cool to have that.